Catalogs and Schemas

Schemas are a logical way of separating a database. They are designed simply for logical separation not physical separation.

In PostgreSQL each database has a schema called public. For sql server people, this is equivalent to SQL Server's dbo schema.

The default schema search path in postgresql.conf file is $user, public. Below are some fast facts and comparisons

- Note: $user is a place holder for the name of the logged in user - which means if there is a schema with the same name as the user, then

that is the schema that is first searched when non-schema qualified queries are run and if such a schema exists, non-qualified schema create table etc. are put in the user's schema.

- If a schema with the user's name does not exist, then non-schema qualified statements go against the public schema

- This is very similar in practice to Oracle and SQL Server 2000 in that the user's schema is the first searched. SQL Server 2000 didn't really have schemas, but had owners which behaved sort of like schemas.

- SQL Server 2005 is a little different in that default schemas can be designated for each user or user group.

- Unlike Oracle and SQL Server 2000, SQL Server 2005 and PostgreSQL do not make the restriction that a schema map directly to the name of a user. You can name schemas however you like without regard to

if there is a user with that name.

PostgreSQL does not allow designating a default schema for each user.

The schema search path setting is globally set at the server service/daemon level in the postgresql.conf file and not part of the user's profile.

After Publish correction/clarification. As of PostgreSQL 8.1 and above As Robert Treat noted, search_paths can be set at the role/user level with the command.

ALTER ROLE someuser_or_role SET search_path = someschema;

A default schema can also be set at the database level with

ALTER DATABASE somedb SET search_path=someschema;

This is slighly different from SQL Server's 2005 implementation in that SQL Server 2005 makes a distinction between logins and users.

Users are local to databases and logins are at the server level so when setting a default schema you are setting it for that specific user in that specific database whereas in PostgreSQL

Users and Groups (Roles) are at the Server level, so setting a default schema for a role sets it to that schema across all databases.

Catalogs is actually a prefabrication of

PgAdmin to make this distinction of calling Schemas that hold meta-like information "Catalogs".

First Catalogs is a misnomer and in fact in some DBMS circles, Catalogs are another name for databases

so its a bit confusing, but then some people (such as Old world Oracle - thought of the Database as the server and each schema

as a separate database. So its all very confusing anyway.). We like to think of schemas as sub-databases.

One may ask what is the difference between a "PgAdmin catalog" and a schema. The short-answer,

as far as PostgreSQL is concerned, there isn't a difference. A PgAdmin catalog is a schema. In fact as far as we can tell, the schemas

information_schema, pg_catalog, and pgagent are hard-wired in the PgAdmin logic to be grouped in something called Catalogs.

The information_schema is a very important schema and is part of the ANSI standard, but is not quite so standard. It would be nice if all relational databases supported it, but they don't all do - MySQL 5, SQL Server (2000+), and PostgreSQL (7.4+) support them.

Oracle and DB2 evidentally still don't, but there is hope. For the DBMS that support the information_schema, there are varying levels, but in all you can be pretty much assured to find tables, views, columns

with same named fields that contain the full listings of all the tables in a database, listings of views and view definition DDL and all the columns, sizes of columns and datatypes.

The pg_catalog schema is the standard PostgreSQL meta data and core schema.

You will find pre-defined global postgres functions in here as well as useful meta data about your database that is very specific to postgres.

This is the schema used by postgres to manage things internally. A lot of this information overlaps with information found in the information_schema, but for data present in the information_schema,

the information_schema is much easier to query and requires fewer or no joins to arrive at basic information.

The pg_catalog contains raw pg maintenance tables in addition to views while the

information_schema only contains read-only views against the core tables. So this means with sufficient super rights and a bit of thirst for adventure in your blood, you can really fuck up your database or make fast changes such as moving objects to different schemas,

by directly updating these tables,

that you can't normally do the supported way.

The other odd thing about the pg_catalog schema is that to reference objects in it, you do not have to schema qualify it as you would have to with the information_schema.

For example you can say

SELECT * FROM pg_tables

instead of

SELECT * FROM pg_catalog.pg_tables

You will notice that also all the global functions are in there and do not need to be schema qualified. Interestingly enough pg_catalog appears nowhere in the

search path, so it appears this is just hard-wired into the heart of PostgreSQL to be first in the search path.

To demonstrate - try creating a dummy table in the public schema with name pg_tables. Now if you do SELECT * from pg_tables - guess which table the results are for?

Casts, Operators, Types

Ability to define Casts, Operators and Types is a fairly unique feature of PostgreSQL that is rare to find in other databases.

Postgres allows one to define automatic casting behavior and how explicit casts are performed. It also allows one to define how operations between

different or same datatypes are performed. For creating new types, these features are extremely important since the database server would not have a clue how to treat these

in common SQL use. For a great example of using these features, check out Andreas Scherbaum's - BOOLEAN datatype with PHP-compatible output

For each table that is created, an implicit type is created as well that mirrors the structure of the table.

Conversions

Conversions define how characters are converted from one encoding to another - say from ascii_to_utf8. There isn't much reason to touch these or add to them that we can think of. If one looks

under pg_catalog - you will find a hundred someodd conversion objects.

Domains

Domains are sort of like types and are actually used like types. They are a convenient way of packaging common constraints into a data type. For example if you have an email address, a postal code, or a phone number

or something of that sort that you require to be input in a certain way, a domain type would validate such a thing. So its like saying "I am a human, but I am a kid and need constraints placed on me to prevent me from choking on steak."

Example is provided below

CREATE DOMAIN us_fedid As varchar(11)

CHECK ( VALUE ~ E'^\\d{3}-\\d{2}-\\d{4}$' OR VALUE ~ E'^\\d{2}-\\d{7}$');

CREATE TABLE us_members (

member_id SERIAL NOT NULL PRIMARY KEY,

federal_num us_fedid

);

Functions

This is the container for stored functions. As mentioned in prior articles, PostgreSQL does not have stored procedures, but its stored function capability is in general much more powerful than

you will find in other database management systems (DBMS) so for all intents and purposes, stored functions fill the stored procedure role. What makes PostgreSQL stored function architecture admirable is

that you have a choice of languages to define stored functions in. SQL and PLPGSQL are the languages pre-packaged with PostgreSQL. In addition to those you have PLPerl, PLPerlU, PLPython, PLRuby, PLTCL, PLSH (shell), PLR and Java.

In terms of ease of setup across all OSes, we have found PLR to be most friendly of setups. PLR on top of that serves a special niche in terms of analysis and graphing capability not found in the other languages.

It opens up the whole R statistical platform to you. For those who have used SAS,S, and Matlab, R is of a similar nature so its a popular platform

for scientists, engineers and GIS analysts.

Operator Classes, Operator Families

Operator Classes are used to define how indexes are used for operator operations. PostgreSQL has several index options to choose from

with the most common being btree and gist. It is possible to define your own internal index structure. If you do such a thing, then you will need

to define Operator Classes to go with these. Also if you are defining a new type with a specialty structure that uses a preferred type of index, you will want to

create an Operator Class for this.

Sequences

Sequence objects are the equivalent of identity in Microsoft SQL Server and Auto Increment in MySQL, but they are much more powerful.

What makes a sequence object more powerful than the former is that while they can be tied to a table and auto-incremented as each new record is added,

they can also be incremented independent of a table. The same sequence object can also be used to increment multiple tables. It must be noted that

Oracle also has sequence objects, but Oracle's sequence objects are much messier to use than PostgreSQL and Oracle doesn't have a slick concept of SERIAL datatype that makes

common use of sequences easy to create and use.

Sequence objects are automatically created when you define a table field as type serial. They can also be created independently of a table by executing

a DDL command of the form

CREATE SEQUENCE test_id_seq

INCREMENT 1

MINVALUE 1

START 200;

If you wanted to manually increment a sequence - say in use in a manual insert statement where you need to know the id being assigned, you can do something of the following.

newid := nextval('test_id_seq');

INSERT INTO mytesttable(theid, thevalue)

VALUES(newid, 'test me');

INSERT INTO mytest_children(parent_id, thevalue)

VALUES(newid, 'stuff, more stuff');

Here are some sequence fast facts

- When you create a new table with a serial data type, and integer field is created, a sequence object is automatically created with the name tablename_fieldname_seq

where tablename and fieldname are the

tablename and fieldname of the table containing the serial field and the default value of the new field is set to the next value of the sequence object. The sequence is created in the same schema as the table.

- PostgreSQL makes no restriction on how many serial/sequence fields you can have in a table.

- Sequences can be incremented independently of a table.

- An auto-created sequence object (as a result of serial data type definition) is automatically dropped when the table is dropped (this is not the case for Pre-7.4 PostgreSQL, but is for PostgreSQL 8 and above).

Tables

We've already covered sequences which can exist independent or dependent of tables. We already know tables hold data. Now we

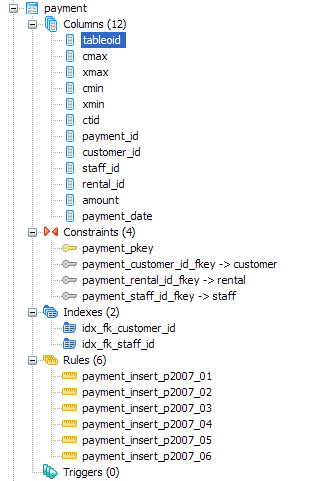

shall look at the objects that hang off of a table. Below is a snapshot of the payment table in Pagila demo database

Columns - We all know what columns are. What is a little interesting about PostgreSQL - is that it has 6 system columns that every table has.

These are tableoid, cmax, xmax, cmin, xmin, ctid and sometimes oid if you CREATE TABLE WITH OIDS. If you do a SELECT * on a table, you will never see these fields. You have to explicitly select them. The tableoid is the same for all records in a given table.

If you did a

SELECT COUNT(DISTINCT tableoid) FROM payment in the pagila database, you will notice it returns 5. How can that

be when we said all records in a table have the same tableoid? This happens because the payment table is a parent to 5 tables and we don't even have any data in the payment table.

So what the 5 is telling us here is that the payment table is comprised of data from 5 tables that inherit from it. When you do a select from a parent table, it in turn queries its children that

are not constraint excluded by the query.

Rules - tables can have rules bound to them. In this case, the payment table has 6 rules bound to it, which redirect inserts to the child table containing the data that fits the date criterion.

Using rules for table partitioning is a common use case in PostgreSQL. In other databases such as SQL Server Enterprise 2005 - this would be called Functional Partitioning and the equivalent to the PostgreSQL rules (in combination with contraints) would be equivalent to Partitioning Functions.

Partitioning is only really useful for fairly large tables, otherwise the added overhead would probably not result in any speed gain and could actually reduce speed performance. PostgreSQL partitioning strategy is fairly simple and easy to understand when compared to some high-end commercial databases. In PostgreSQL 8.4 this strategy will probably become more sophisticated.

Triggers - PostgreSQL allows one to define Triggers on events BEFORE INSERT/UPDATE, AFTER INSERT/UPDATE and for EACH ROW or EACH STATEMENT. The minor restriction in PostgreSQL

is that the trigger body can not be written directly in the trigger envelop. The trigger envelop must call a triggering function and the triggering function is a special kind of function that returns a trigger.

Indexes, Keys and Foreign Key Constraints - These objects are equivalent and behave the same as in other databases. PostgreSQL support referential integrity constraints and CASCADE UPDATE/DELETE on these.

Views

Last but not least, our favorite - Views. Views are the best thing since sliced-bread. They are not tables but rather saved queries that are

presented as tables (Virtual Tables). They allow you to do a couple of interesting things

- Abstract a complicated relational structure into a commonly used easy to digest flat-file view well suited for reporting.

- Just like stored functions/stored procs, one can use a view to limit user's ability to query certain columns and rows, but unlike cumbersome stored procedures/stored functions (that require you to pass in arguments in a certain order and unable to inspect the structure of the return value until its returned), these are

presented as a harmless familiar looking table structure. For a more detailed description of the pros and cons of using views, stored procs, stored functions, triggers etc. check out our Choice Between Stored Procedures, Functions, Views, Triggers, Inline SQL article. For a detailed example of setting up a view

check out Database Abstraction with Updateable Views

- Here is an interesting example posed by Magnus Hagander Database or schema that demonstrates using View in combination with schemas to control input and visibility of rows.